
The Ann Arbor Public Schools Board of Education discussed at Wednesday's Committee of the Whole meeting what this year's process would be for evaluating Superintendent Patricia Green.

Melanie Maxwell | AnnArbor.com file photo


The Ann Arbor Board of Education identified Wednesday four student growth measures and a goal-inspired rubric it will use to evaluate Superintendent Patricia Green in June.

The board discussion also included community surveys and what information it is obligated to make public.

The discussion on how to modify the board's superintendent evaluation process to meet new state requirements was sandwiched Wednesday between two closed executive sessions to informally review Green, who is in her second year with the district.

The board started its executive session at 5:30 p.m. and recessed a few minutes after 7 p.m. to begin the scheduled Committee of the Whole meeting. The board then re-entered closed session at about 9:20 p.m. for about another hour.

Starting in the 2013-14 academic year, Michigan law will require 25 percent of a superintendent's evaluation to be based on student growth and achievement data, and another 5 percent to be based on student attendance. School boards also will be required to establish some way of rating their sole employees on a scale of "ineffective," "minimally effective," "effective" and "highly effective."

Another 10 percent of a superintendent's evaluation, per state law, has to do with ensuring the district is implementing new effectiveness measures for teacher evaluations, which are being fleshed out by the state right now, said Board Vice President Christine Stead, who led Ann Arbor's evaluation discussion Wednesday.

She said Ann Arbor Public Schools is meeting this obligation: the district first began piloting a Charlotte Danielson-based model for teacher evaluations last fall, and Green added that the model is being used districtwide this year. The Danielson model also is one of four evaluation methods the state currently is piloting for its statewide teacher evaluation system, coming in 2014.

While the board waits for more guidance on what will be expected of superintendent evaluations, a few pieces are known, said David Comsa, AAPS deputy superintendent of human resources and legal services. School boards will need four measurable areas for configuring superintendents' student-growth ratings, and, at least for now, boards have a lot of flexibility in whether they use national, state or local assessments or some other objective student growth data.

Stead said student grade point averages, for example, could be considered subjective.

Green made one suggestion to the board regarding the student-growth piece: that her growth measurements align with the district's achievement and discipline gap data.

"Those are the measures we are monitoring very significantly and are looking at our subgroups where we have been focusing on the disproportionality," Green said, adding that most employees in the district are being held accountable for closing the achievement and discipline gaps. "If the metric for my evaluation is different than what I'm holding my staff accountable for, they're holding principals accountable for and teachers are being held accountable for, then it doesn't make much sense."


AAPS Superintendent Patricia Green

Stead said this was a good point.

Closing the discipline and achievement gaps at AAPS is a high priority on the superintendent's list of goals and objectives. The term "achievement gap" refers to the test score disparity that exists between Caucasian students and their African American, Hispanic, special education and economically disadvantaged peers. Caucasian students typically outperform their peers on standardized tests. But under Green's direction, AAPS has launched a plan to improve the test scores of minority students and other low performers.

Similarly, more African American, Hispanic, special education and low-income students receive out-of-school suspensions and expulsions than Caucasian students at AAPS. These achievement and discipline gap findings at AAPS mirror national trends and statistics.


The four student growth measurements the board agreed upon for its superintendent evaluation were: Michigan Educational Assessment Program (MEAP) data, Michigan Merit Exam (MME) data, graduation rates and Northwest Evaluation Association (NWEA) data. These data will be examined districtwide and, when appropriate, broken down into subgroups by student demographics and grade level.

These data — MEAP, MME, NWEA and graduation rates — all were included in the administration's student achievement report to the board in October, which is partially why these measures were chosen.

The board also will develop a rubric for this year's evaluation. The trustees discussed using something similar to the tool the Michigan Association of School Boards published, but ultimately board members decided against it.

In 2011, after the new law was passed, the MASB developed a sample evaluation form that school boards were encouraged to use. It employs a point system (1 point for "ineffective" and 4 points for "highly effective") to score a superintendent in 11 categories, such as staff relationships, business and finance, educational leadership, personal qualities and community relations.

Each category is weighted to meet the state-mandated percentages for student achievement data. Each trustee tallies a total score.

An average of all the trustees' scores would equate to an overall rating for the superintendent. A score of 85 to 100 percent is deemed highly effective, 68 to 84 percent is effective, 50 to 67 percent is minimally effective and less than 50 percent is ineffective.
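The MASB scoring described above boils down to a small calculation, sketched here in Python purely for illustration; it is not the MASB's own form. Several details are assumptions: the category names and weights are hypothetical (they only echo the state's 25 percent student growth and 5 percent attendance requirements, lumping the form's remaining categories together), a trustee's total is assumed to be expressed as a percentage of the maximum 4 points, and scores that fall between the published bands (for example, 84.5 percent) are assumed to count toward the lower band.

```python
# Illustrative sketch of the MASB-style scoring arithmetic described in the article.
# ASSUMPTIONS (not from the MASB form): hypothetical categories and weights that sum
# to 1; a trustee's score is a percentage of the 4-point maximum; percentages between
# the published bands fall into the lower band.

MAX_POINTS = 4  # 1 = "ineffective" ... 4 = "highly effective"

# Hypothetical weights echoing the state-mandated 25% and 5% shares.
weights = {
    "student_growth": 0.25,
    "student_attendance": 0.05,
    "all_other_categories": 0.70,
}


def trustee_percentage(scores, weights):
    """Weighted score for one trustee, as a percentage of the maximum possible."""
    weighted = sum(weights[category] * scores[category] for category in weights)
    return 100 * weighted / MAX_POINTS


def rating(percent):
    """Map a percentage to the four bands: 85-100, 68-84, 50-67, below 50."""
    if percent >= 85:
        return "highly effective"
    if percent >= 68:
        return "effective"
    if percent >= 50:
        return "minimally effective"
    return "ineffective"


def overall_rating(all_trustee_scores, weights):
    """Average every trustee's percentage, then map the average to a rating."""
    percents = [trustee_percentage(s, weights) for s in all_trustee_scores]
    average = sum(percents) / len(percents)
    return average, rating(average)


# Two hypothetical trustees scoring the same superintendent.
trustees = [
    {"student_growth": 3, "student_attendance": 4, "all_other_categories": 4},
    {"student_growth": 2, "student_attendance": 3, "all_other_categories": 3},
]
average, label = overall_rating(trustees, weights)
print(f"{average:.2f}% -> {label}")
```

Here the first hypothetical trustee's weighted total is 3.75 of 4 points (93.75 percent) and the second's is 2.75 (68.75 percent), so the board average of 81.25 percent lands in the "effective" band.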

Green asked Wednesday if board members knew of any school districts in Michigan that were using the MASB's evaluation model.

"I don't know of any school district using this form, but I wanted to know if you do," she said. "I've been talking with my colleagues in Washtenaw County about their evaluations and I know they are not using this."

Stead said she was not aware of any districts either. Board President Deb Mexicotte said that last year she worked her way through the MASB tool as a "thought exercise" in preparing for the board's discussion-based evaluation.

"I thought there were some good things on there; I thought there were some humorous things. And then there were those things I thought 'Gosh, I don't know why they'd put this on here,'" she said.

The board decided to use the superintendent's goals that were established in August to shape the evaluation rubric and categories. Stead said she would draft something and send it to other board members for input on what each category should be weighted or worth in the evaluation — excluding those categories, like student achievement, that already have state-mandated percentages. Discipline data would be factored into one of the to-be-determined categories.

Green's goals for the current school year were broken down into five categories:

- Strategic planning

- Budget, technology, the discipline gap and accountability

- Community outreach

- Curriculum, student achievement and student growth

- Personnel management

The board also agreed to stick with its practice of issuing a statement summarizing the board's concerns and opinions about the superintendent's performance at the end of its discussion-driven evaluation.

Comsa said that for this school year, the board legally can continue doing what it always has done with the summation statement. Mexicotte added, however, that next year the state may require the board to publicly report some type of quantifiable score or effectiveness rating.

The board reached consensus that it would make its new evaluation rubric available to the public this year, so people can see how the board arrives at its summation statement, what topics are considered and discussed and how those items are weighted. But Comsa advised the trustees that their individual or aggregate scores would not necessarily have to be produced in a Freedom of Information Act request. He told board members the individual notes they take going into the discussion-based evaluation are not public documents.

The community feedback mechanism for evaluating the superintendent in June will remain the same as in 2012. Each of the seven school board members can select a handful of people whose input they feel could be valuable to the discussion. The superintendent also can suggest people.

The suggestions then are sent to Mexicotte, and she mails out a survey on behalf of the board to those community members the trustees recommend. The district destroys the mailing list and the returned surveys at the end of the process to ensure community confidentiality.

Those asked to complete the survey often include teachers, building principals, union leaders and other vocal members of the public. Last year, the board distributed 90 surveys and received 57 back, according to previous reports.

When the surveys are returned, the one or two trustees in charge of managing the superintendent's review open them and prepare a summary for the whole board to consider throughout its conversation on the night of the evaluation.

Mexicotte described the entire evaluation process as a math equation: the required metrics, superintendent goals/objectives and the weighting + the narrative/discussion, community feedback and individual trustees' judgments and observations = the evaluation statement that is produced the night of the formal review.

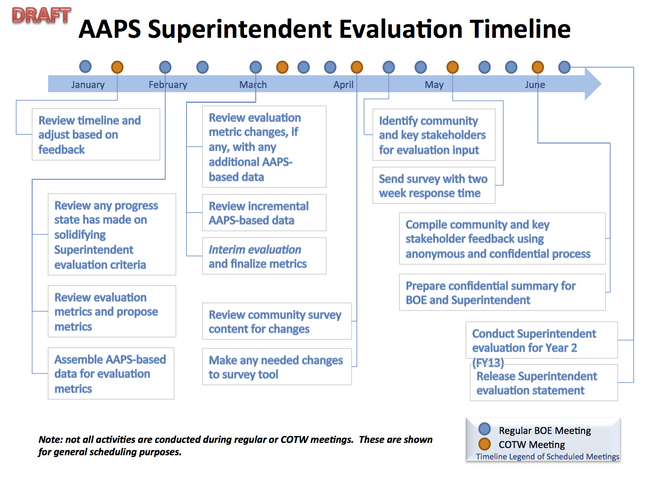

The following is a draft of the board's superintendent evaluation timeline:


Danielle Arndt covers K-12 education for AnnArbor.com. Follow her on Twitter @DanielleArndt or email her at daniellearndt@annarbor.com.